So why do we need the volatile keyword?

Most of the time I work on high-load JVM-based backends, so of course I know the volatile keyword. If you google its meaning, you will probably find something related to caching. I did too, and it didn't satisfy my curiosity, so I had to go deeper, way deeper. So why not share my findings here, so that curious readers can get a better view of the concurrent nature of Java?

It starts with caches

Before explaining what volatile does, it helps to understand what problem it solves in the first place. Let's start with a small code sample:

    class Counter {
        private int cnt;

        public void increment() {
            this.cnt++;
        }

        public int get() {
            return this.cnt;
        }
    }

A straightforward counter that lets you get and increment a number. But what is going on in terms of the CPU? The silicon mafia typically ships three levels of cache (L1, L2, L3), sometimes four, between the CPU and RAM. The first level is located as close to the core as possible and is the smallest (tens of kilobytes). The most recently used data is kept there. Each next level is bigger but slower to access than the previous one.

Moreover, most hardware nowadays ships with multiple CPU cores, which may or may not share the same caches. Other than that, each core has its own registers. A CPU register is a unit of storage that is accessed primarily by instructions that refer to it directly by name (for example R0, or register 0).

Now

let's return to the example above that performs a simple

increment this.cnt++. To read a single

variable means to check if it's present in CPU

level 1 cache, most probably it isn't there if we just

started

the program. Next, the second level cache has to be checked

and

so

on. In a Single Core environment the process would end here

and

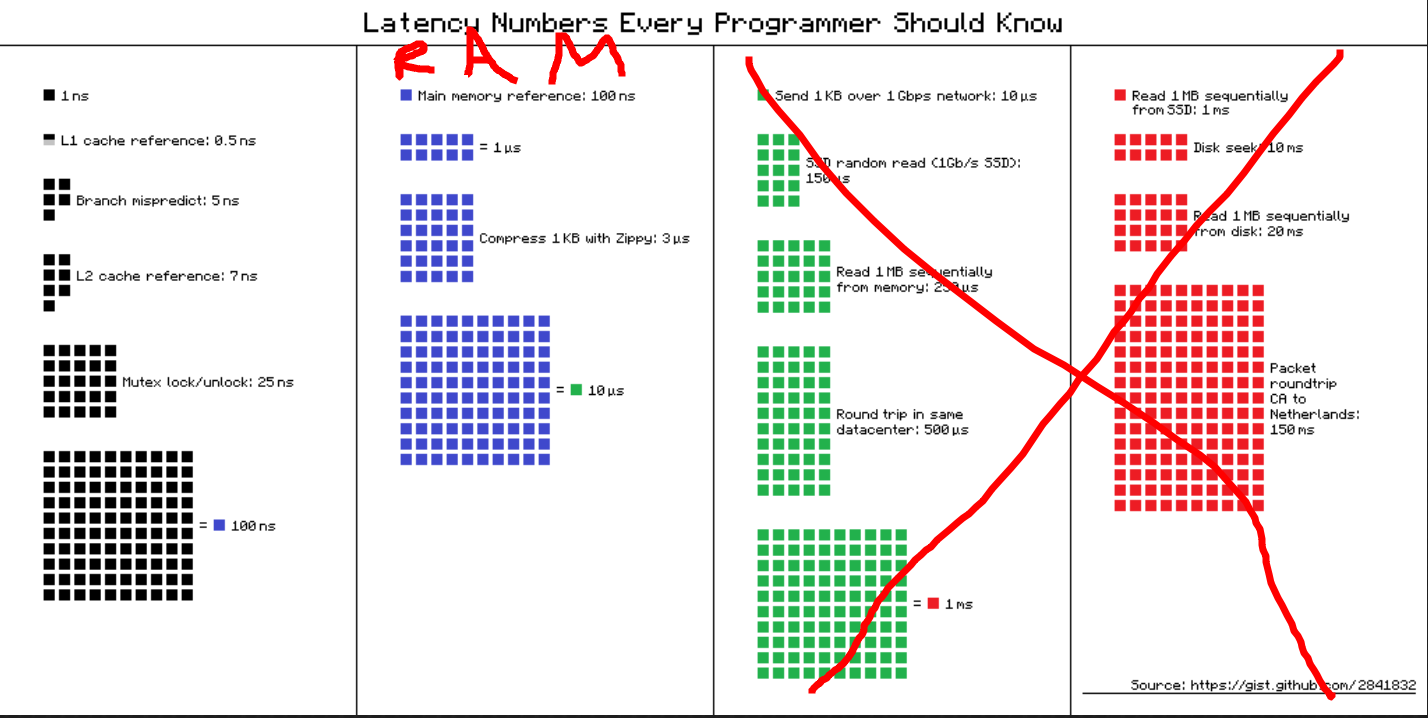

Cpu will have to load the variable from RAM. Just for you to

understand the latency it takes for CPU to check each

individual

cache

and RAM here is the nice diagram.

1 ns for a level 1 cache read versus 100 ns for a RAM read. Looks impressive, doesn't it? However, for multi-core systems things become more complicated. Before incrementing the counter, a core has to ask the other cores over the system bus whether they hold a modified version of this counter in their own caches. If one core comes back and says "yes, I do", then all cores have to be synchronized with this modified counter (some hardware vendors make it possible to read this value directly from the system bus, which eliminates the round trip through main memory). Now the core can increment the counter.

One thing I misunderstood: I believed the variable has to be flushed to main memory after each synchronization, which isn't correct (I will talk about it later).

There is a thing called MESI (I know what you're thinking, but it's another Mesi). It's a cache coherence protocol named after the states that a cached line can be in:

- Modified - M. The cache line is present only in the current cache and has been modified, so it differs from main memory

- Exclusive - E. The cache line is present only in the current cache and hasn't been modified

- Shared - S. The cache line may also be stored in other cores' caches, and all copies match the value in main memory

- Invalid - I. The cache line holds stale data and must not be used. When one cache marks a line as Modified (M) or Exclusive (E), the copies of this line in other caches are marked as Invalid

MESI keeps all caches coherent.
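To make these state transitions a bit more tangible, here is a toy sketch of a single cache line shared by two cores. The MesiLine class and its read/write methods are hypothetical names, and the model is heavily simplified (no bus messages, no write-back to RAM, no timing), so treat it as an illustration and not as a description of real hardware.

```java
import java.util.Arrays;

// Toy model of ONE cache line as seen by several cores.
// Assumption: transitions are instantaneous and nothing else is modeled.
class MesiLine {
    enum State { MODIFIED, EXCLUSIVE, SHARED, INVALID }

    private final State[] states;

    MesiLine(int cores) {
        states = new State[cores];
        Arrays.fill(states, State.INVALID); // nobody holds the line yet
    }

    // Core `core` reads the line.
    void read(int core) {
        if (states[core] != State.INVALID) {
            return; // cache hit: M, E and S can all serve a read locally
        }
        boolean heldElsewhere = false;
        for (int i = 0; i < states.length; i++) {
            if (i != core && states[i] != State.INVALID) {
                // Any other holder (M, E or S) drops to Shared.
                states[i] = State.SHARED;
                heldElsewhere = true;
            }
        }
        states[core] = heldElsewhere ? State.SHARED : State.EXCLUSIVE;
    }

    // Core `core` writes the line: every other copy is invalidated.
    void write(int core) {
        for (int i = 0; i < states.length; i++) {
            if (i != core) {
                states[i] = State.INVALID;
            }
        }
        states[core] = State.MODIFIED;
    }

    State stateOf(int core) {
        return states[core];
    }

    public static void main(String[] args) {
        MesiLine line = new MesiLine(2);
        line.read(0);  // core 0 is the only holder
        line.read(1);  // both cores now share the line
        line.write(0); // core 0 modifies it, core 1 is invalidated
        System.out.println(line.stateOf(0) + " " + line.stateOf(1)); // prints "MODIFIED INVALID"
    }
}
```

Notice that nothing in this model talks about main memory on a write: the other copy is simply invalidated, which is the point made above about not flushing after every synchronization.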

So now let's google the volatile keyword in Java. The first example you will encounter covers a boolean stop flag, shown here without volatile:

    public class StoppableTask extends Thread {
        private boolean pleaseStop;

        public void run() {
            while (!pleaseStop) {
                // do some stuff...
            }
        }

        public void tellMeToStop() {
            pleaseStop = true;
        }
    }

We have a thread (the first one) that does some work in an infinite loop. Let's assume that another thread, running on a completely different core, calls tellMeToStop, which changes the boolean variable and should stop the loop the first thread is running. If you don't declare this variable as volatile, then the first thread can cache the boolean in the CPU cache and keep rereading it from there; the change made by the other thread won't affect the first thread, because the variable is still read from its local cache. That's how most articles explain the volatile keyword: use it, or otherwise the thread will cache the variable.

But wait, didn't I just tell you about this MESI thing, and that a core can't modify a variable unless all other copies are synchronized? How is it even possible for a thread to keep seeing a stale cached value with a cache coherence protocol in place? That's why I was confused, and it took me a while to get an answer, so here it is. The thing is, it's not even about CPU caches, it's about CPU registers. When you compile and run your Java application, the compiler (javac does very little optimization, so in practice this is mostly the JIT) is allowed to reason about your code as if it were executed by a single thread. As a result, it can make a really great optimization. Let's take a look at what the class above effectively becomes after compilation:

    public class StoppableTask extends Thread {
        public static void main(String[] args) {
            new StoppableTask().run();
        }

        private boolean pleaseStop = false;

        public void run() {
            // pleaseStop was read once, before the loop, and is never rechecked
            while (true) {
                // do some stuff...
            }
        }

        public void tellMeToStop() {
            pleaseStop = true;
        }
    }

The compiler is smart enough to see that the main thread never calls tellMeToStop, which means there is no way the boolean variable can be modified while the loop runs. The variable can therefore be kept in a CPU register. A CPU register is not the same as the CPU cache: it is a small piece of storage located within the core itself, and accessing it is much faster than reading from the CPU cache.

But what if we are in a multithreaded environment? Still, even if an application uses multiple threads, the compiler is allowed to assume that those threads don't communicate with each other through object fields, so each thread is allowed to keep a local copy of every object field or primitive it works with in the registers of the core it is running on.

Remember, all caches are coherent, but CPU registers are not. If a thread writes something to a variable and that variable still lives only in a CPU register, there is no way threads running on other cores will see the change.

Is there a way to prevent the compiler from keeping this variable in a register? Yes, and it's volatile.

Volatile

So now that you know what MESI is, what a CPU register is and how a program works across multiple cores, let's talk about volatile. At its core, the volatile keyword tells the compiler that keeping the variable in a register is prohibited. If a thread wants to read the variable, it has to read it from the cache (unless the variable isn't there, in which case main memory gets involved). If a thread wants to modify the variable, it has to write it back to the CPU cache, and then MESI synchronizes the caches of the other cores.
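As a minimal sketch of the fix, here is the earlier stop-flag example with the field declared volatile. StoppableWorker and stopsWithin are hypothetical names used only for this illustration, and the 50 ms sleep and the timeout are arbitrary values.

```java
// The stop-flag example again, this time with a volatile field.
class StoppableWorker extends Thread {
    // volatile: the loop below must re-read the field on every iteration
    private volatile boolean pleaseStop;

    @Override
    public void run() {
        while (!pleaseStop) {
            // do some stuff...
        }
    }

    void tellMeToStop() {
        pleaseStop = true;
    }

    // Starts a worker, asks it to stop from the calling thread and reports
    // whether it terminated within the given timeout.
    static boolean stopsWithin(long timeoutMillis) {
        StoppableWorker worker = new StoppableWorker();
        worker.setDaemon(true); // don't keep the JVM alive if something goes wrong
        worker.start();
        try {
            Thread.sleep(50);          // let the loop spin for a moment
            worker.tellMeToStop();     // write from a different thread
            worker.join(timeoutMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + stopsWithin(2000));
    }
}
```

If you remove the volatile modifier, the program may still stop or may spin forever, depending on what the JIT decides to do with the loop, which is exactly why this kind of bug is so hard to reproduce.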

OK, so what if you declare all variables as volatile in a single-threaded program? As I said, the thread won't be able to keep them in registers, but there is another problem. volatile in Java has a second property: it prevents instruction reordering.

    public class Reordering {
        private int foo;
        private int bar;

        public void run() {
            this.foo += 1;
            this.bar += 2;
            this.foo += 2;
        }
    }

Let's look at the code above. For a moment, forget about CPU registers. Two variables are incremented in sequence. In terms of the processor, foo is loaded into the cache and incremented by one. Now let's say the cache is full (it isn't, remember that the level 1 cache alone holds tens of kilobytes and there are several cache levels, but let's pretend we can only keep one int in the cache), so the CPU can't load bar without first evicting foo back to main memory. Then bar is loaded and incremented by two, and again we can't load foo to increment it by two, because bar occupies the entire cache and has to be evicted first. That involves a lot of round trips between RAM and the CPU caches. Can we make it better? Of course: the compiler will reorder your instructions to make it faster.

    public class Reordering {
        private int foo;
        private int bar;

        public void run() {
            this.foo += 3;
            this.bar += 2;
        }
    }

So foo is loaded only once and incremented by 3 in one instruction. For a single-threaded program it doesn't matter whether you increment foo by 3 in one instruction or in two separate ones; the end result is the same. For multithreaded programs, however, that is not the case. If the compiler reorders instructions, a second thread can observe the state {foo = 3, bar = 0} while the method is running, which doesn't make any sense if you look at the source code. So the second property of volatile, apart from visibility, is preventing instruction reordering:

    public class Reordering {
        private volatile int foo;
        private int bar;

        public void run() {
            this.foo += 1; // can't be reordered
            this.bar += 2;
            this.foo += 2;
        }
    }

So, to answer the previous question: volatile in a single-threaded environment prevents reordering, which makes your program slower. My advice is not to use it as long as you don't use multithreading.

Happens Before

Apart from volatile, there are other ways to make changes made by one thread visible to others. All of them are combined into a set of rules called the happens-before guarantee. Let's take a look at some of them:

- Synchronized block/method - All writes made inside a synchronized method/block will be visible to another thread that subsequently starts executing this block/method

      public class SyncBlock {
          private int first;
          private int second;

          public synchronized void doSmth() {
              this.first++;
              this.second++;
          } // after exiting, both variables will be visible to every thread that enters this block
      }

- A write to a volatile variable happens before every subsequent read of that variable. This one is handy because it also covers all non-volatile writes that happened before the write to the volatile variable

      public class VolatileWrite {
          private int first;
          private volatile int second;

          public void doSmth() {
              this.first++;
              this.second++;
              // a second thread that reads second will see the updated version of both variables,
              // because incrementing first happened right before the increment of the volatile variable
          }
      }

- All writes that happened before Thread.start() will be visible to the newly started thread

      public class ThreadVisibility {
          private static int counter = 0;

          public static void main(String[] args) throws Exception {
              counter++;
              new Thread(() -> {
                  System.out.println(counter);
                  // the second thread will see the modified counter even
                  // if both threads are executed by different cores
              }, "Second Thread").start();
          }
      }
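The volatile rule is the easiest one to try out yourself. The sketch below uses hypothetical names (Publication, publish, awaitData, demo): one thread writes a plain field and then a volatile flag, and a reader that spins on the flag should also see the plain field. The value 42 is arbitrary.

```java
// Publishing a plain field through a volatile flag.
class Publication {
    private int data;               // plain, non-volatile field
    private volatile boolean ready; // volatile flag that "publishes" data

    void publish(int value) {
        data = value;  // 1. plain write
        ready = true;  // 2. volatile write: everything before it becomes visible
    }

    int awaitData() {
        while (!ready) {
            // spin until the volatile read observes the write from publish()
        }
        return data;   // sees the value written before ready = true
    }

    // Runs a reader thread against a writer and returns what the reader saw.
    static int demo() {
        Publication publication = new Publication();
        int[] seen = new int[1];
        Thread reader = new Thread(() -> seen[0] = publication.awaitData());
        reader.start();
        publication.publish(42);
        try {
            reader.join(); // join() also gives happens-before for seen[0]
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints 42
    }
}
```

The order of the two writes in publish matters: if the flag were set before data, the reader could legally see ready == true together with the old value of data.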

False Sharing

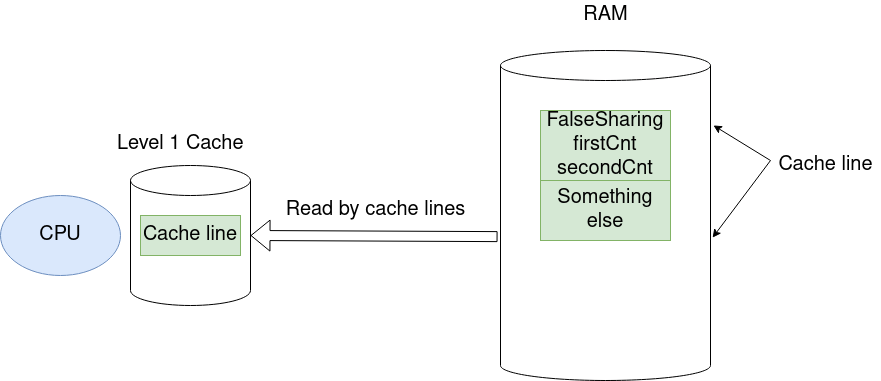

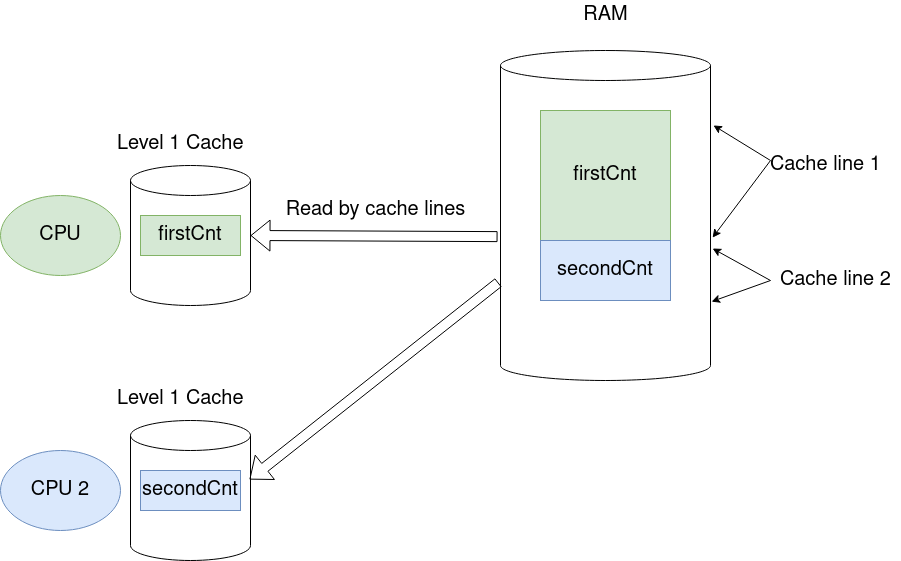

When I described the MESI protocol, I kept mentioning a thing called a cache line. The thing is, the CPU doesn't load data from RAM variable by variable; it loads data in cache lines. Each cache line is a contiguous block of memory of a fixed size (for example, on Intel architectures a cache line is 64 bytes). False sharing happens when different variables used by different cores lie on the same cache line, and it is a source of performance degradation. So what does it look like?

    public class FalseSharing {
        private volatile long firstCnt = 0;
        private volatile long secondCnt = 0;

        public void methodThatRunOnSecondThread() {
            this.secondCnt++;
        }

        public void methodThatRunOnFirstThread() {
            this.firstCnt++;
        }
    }

Let's take a look at the code snippet above. We have a class with two long variables, both of them volatile. An instance of this class takes 32 bytes (8*2=16 bytes for the two long variables, plus 12 bytes for the object header as on a typical 64-bit HotSpot JVM, rounded up to a multiple of 8 bytes). Now we have two threads running on different cores, each calling a method that increments a single counter. If caches worked with individual variables, it would go like this:

- The first thread caches firstCnt in the level 1 cache of its core and starts incrementing it

- The second thread caches secondCnt in the level 1 cache of another CPU core and starts incrementing it

However, the CPU works in terms of cache lines, and because an instance of this class takes only 32 bytes, it fits into a single cache line (which is 64 bytes). So now let's see how both threads work with the same cache line. The first thread updates the first counter, but because the second variable is stored on the same cache line, MESI has to invalidate that line in the cache of the second core, and the same happens in the other direction when the second thread updates the second variable. It's a big problem that literally kills performance, because the cache line keeps bouncing between the cores. How do we deal with it? With annotations, of course.
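As a back-of-the-envelope check of the sizes used above, here is a small sketch of the arithmetic. The 12-byte header and 8-byte alignment are assumptions about a typical 64-bit HotSpot setup and are not something the JVM specification guarantees; LayoutEstimate and its methods are hypothetical names.

```java
// Rough estimate of an object's size and whether it fits into one cache line.
class LayoutEstimate {
    static final int HEADER_BYTES = 12;     // assumption: HotSpot object header
    static final int ALIGNMENT = 8;         // assumption: objects are 8-byte aligned
    static final int CACHE_LINE_BYTES = 64; // the cache line size used in the text

    // Rounds size up to the next multiple of alignment.
    static int alignUp(int size, int alignment) {
        return (size + alignment - 1) / alignment * alignment;
    }

    // Estimated size of an object whose only fields are `longFields` longs.
    static int instanceSize(int longFields) {
        return alignUp(HEADER_BYTES + longFields * Long.BYTES, ALIGNMENT);
    }

    public static void main(String[] args) {
        int size = instanceSize(2); // 12 + 16 = 28, rounded up to 32
        System.out.println(size + " bytes, fits in one cache line: "
                + (size <= CACHE_LINE_BYTES)); // prints "32 bytes, fits in one cache line: true"
    }
}
```

Fitting into 64 bytes doesn't strictly prove that both fields share a line, since an object can straddle a cache line boundary, but with the two counters only 8 bytes apart they almost always will.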

Meet @Contended

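To solve the problem above, we can put one counter on a separate cache line, but the counter is a long and takes only 8 bytes. To deal with that, we can annotate the field with @Contended, so that the JVM pads it and the single variable occupies a whole cache line on its own, at the cost of some wasted space. Note that @Contended is a JDK-internal annotation (sun.misc.Contended in Java 8, jdk.internal.vm.annotation.Contended since Java 9), and HotSpot ignores it in application classes unless you run with -XX:-RestrictContended.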

    public class FalseSharing {
        @Contended
        private volatile long firstCnt = 0;
        private volatile long secondCnt = 0;

        public void methodThatRunOnSecondThread() {
            this.secondCnt++;
        }

        public void methodThatRunOnFirstThread() {
            this.firstCnt++;
        }
    }

In this case, firstCnt lies on a separate cache line from secondCnt, so each thread can work on its own counter without having to synchronize with the other.
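If you can't use @Contended, a common alternative is to pad by hand. Note that this is a different technique from the annotation: the sketch below surrounds the counter with unused long fields through a small class hierarchy, assuming the JVM lays out superclass fields before subclass fields (HotSpot does so in practice, but nothing in the specification promises it). All class names are hypothetical, and the timings it prints depend entirely on your machine.

```java
// Manual padding: 7 unused longs (56 bytes) on each side of `value`.
class PadBefore { long p1, p2, p3, p4, p5, p6, p7; }
class PaddedValue extends PadBefore { volatile long value; }
class PaddedCounter extends PaddedValue { long q1, q2, q3, q4, q5, q6, q7; }

// Unpadded layout from the article: the counters sit right next to each other.
class AdjacentCounters {
    volatile long firstCnt;
    volatile long secondCnt;
}

class PaddingDemo {
    static final int ITERATIONS = 1_000_000;

    // Runs both tasks on their own threads and returns the elapsed nanoseconds.
    static long timeInParallel(Runnable first, Runnable second) {
        Thread t1 = new Thread(first);
        Thread t2 = new Thread(second);
        long start = System.nanoTime();
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return System.nanoTime() - start;
    }

    // Each thread increments its own field; both fields share one object.
    static long runAdjacent(AdjacentCounters c) {
        return timeInParallel(
            () -> { for (int i = 0; i < ITERATIONS; i++) c.firstCnt++; },
            () -> { for (int i = 0; i < ITERATIONS; i++) c.secondCnt++; });
    }

    // Each thread increments its own padded counter.
    static long runPadded(PaddedCounter a, PaddedCounter b) {
        return timeInParallel(
            () -> { for (int i = 0; i < ITERATIONS; i++) a.value++; },
            () -> { for (int i = 0; i < ITERATIONS; i++) b.value++; });
    }

    public static void main(String[] args) {
        long adjacentNs = runAdjacent(new AdjacentCounters());
        long paddedNs = runPadded(new PaddedCounter(), new PaddedCounter());
        System.out.println("adjacent: " + adjacentNs / 1_000_000 + " ms");
        System.out.println("padded:   " + paddedNs / 1_000_000 + " ms");
    }
}
```

This is not a proper benchmark (no warm-up, a single run), so use something like JMH before drawing conclusions. Each counter has exactly one writer here, which is the only reason the non-atomic ++ on a volatile field is safe.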

Finale

I hope that readers now understand what's going on at the hardware level and how some compiler optimizations can ruin the correctness of a program. The JVM is a great platform that encapsulates all kinds of complicated hardware-related things; however, to understand performance degradation in your app you need to understand how these things work on bare metal. CPU caches bridge the gap between the fast CPU and slow main memory, and data structures that are nicely aligned to cache lines will always benefit from caching. Or, as was said by Scott Meyers, "Show me your fancy data structure, and I promise, simple array will be faster".

One thing I want to mention is that the JVM specification doesn't talk in terms of CPU caches or registers. It specifies the semantics in terms of an abstract machine. It's up to the Java Runtime Environment to execute the code on a concrete machine in such a way that it fulfils the specification.

There are some resources I found useful on CPU caches, both in terms of hardware and in terms of how the JVM works with them. Check them out.